Facebook makes the case that AI can work

Moderation on Facebook has prompted one of the biggest questions lawmakers have found themselves asking aloud this year: can the social media company control its own platform? The evidence has repeatedly pointed to no, and some regulators have responded with proposals for harsh laws that would penalize the company for failing to moderate its platform.

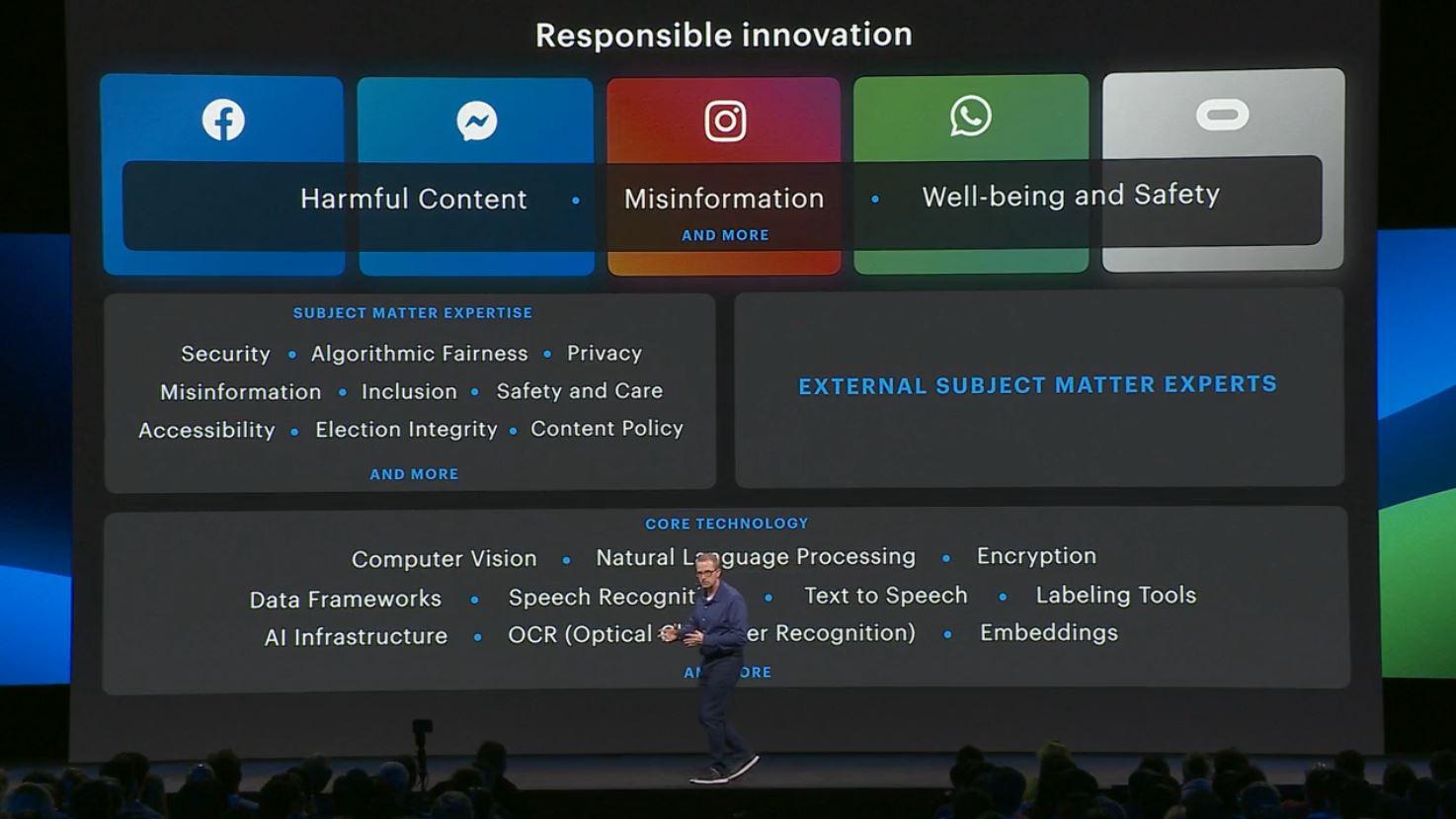

Facebook was on the defensive on Wednesday, the second day of F8, focusing heavily on its use of artificial intelligence to understand what people are posting and to police it automatically.

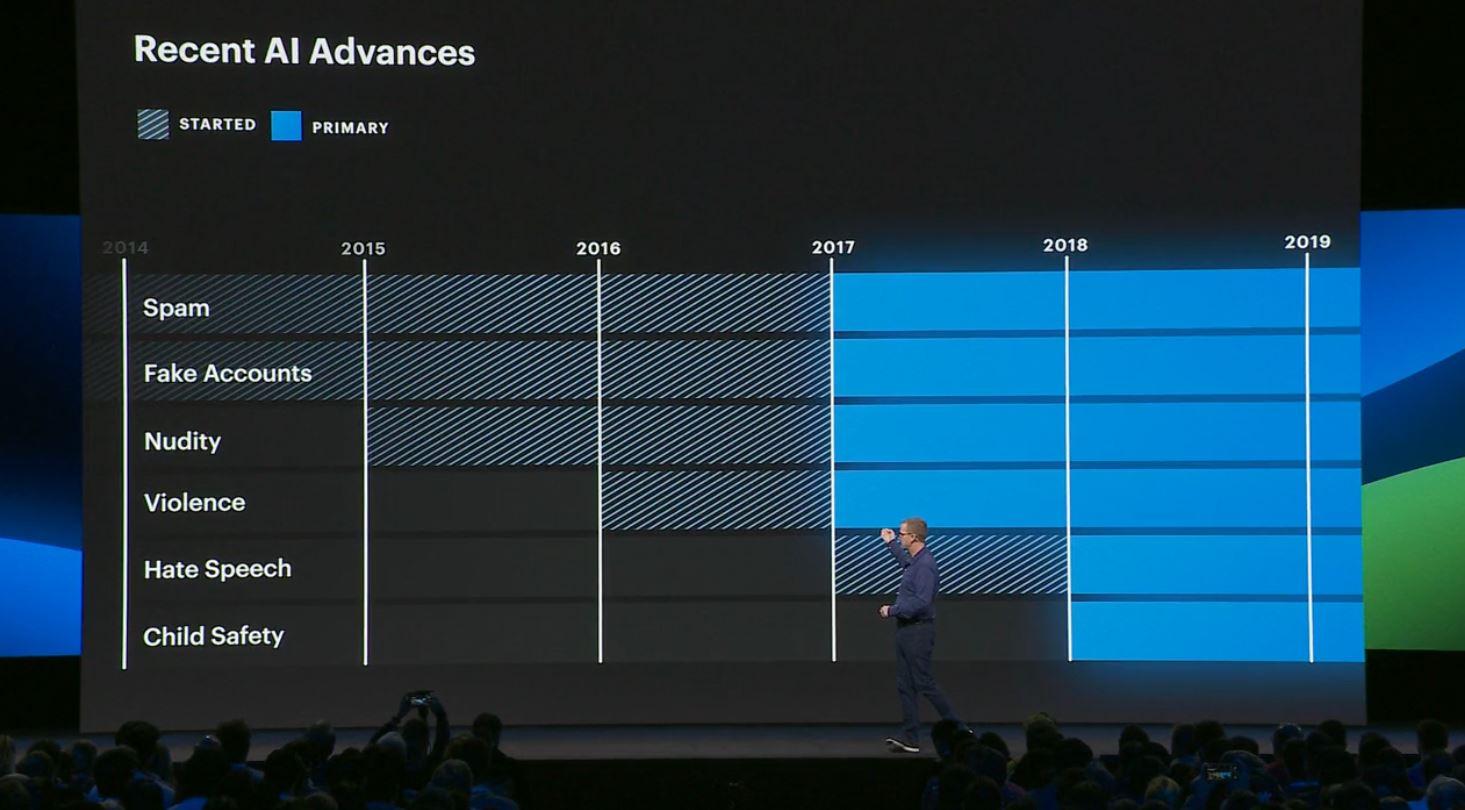

The company's CTO, Mike Schroepfer, offered a series of examples to argue that its AI is actually hitting many of its goals, and said that in a number of categories, AI is responsible for "more than fifty percent of takedowns" of content. Those categories include spam, fake accounts, nudity, violence, hate speech and child safety, most of which only began to be automated in 2018.

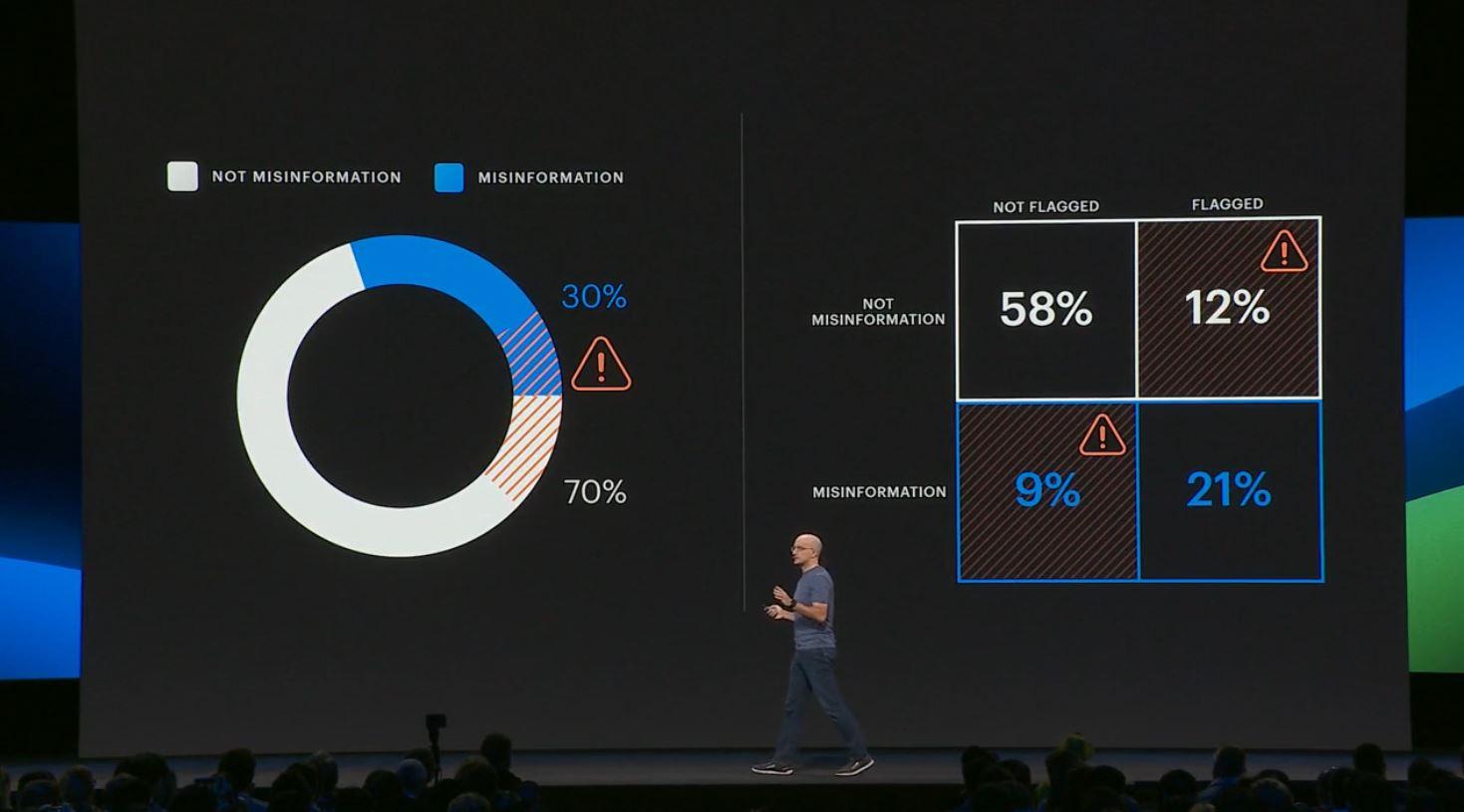

And yet, Facebook has repeatedly failed to act in many cases, or bungled the ones in which it did intervene. When Schroepfer zoomed out to show the range of problems Facebook is grappling with, from election integrity to hate speech, it was easy to see why: at a scale of two billion users, the breadth of the problem is utterly overwhelming no matter what you do.

Across the board, the company's tooling appears to have advanced by leaps and bounds. Facebook demonstrated how it can accurately identify gender, skin tone and other attributes in photos and videos, how its AI can understand intent even across languages, and how it processes speech for moderation.

These are all promising, impressive advances in artificial intelligence, but by Facebook's own admission, every one of them faces adversaries who are finding new ways to attack those models, often as quickly as the models learn.

Wrapping up his introduction, Schroepfer said that "our job is to use technology to make amazing experiences and connect people [...] while holding up our responsibility to prevent all the bad and harm. If we do that work well, we earn the right to build that future."

This remark was perhaps the most self-aware moment of the entire event, showing a clear understanding of how much responsibility the company carries. But it also contained the assumption Facebookers always seem to project: that they will be the ones building the future, as if no one else could do it, or as if Facebook inherently has the right to.

What was apparent, despite that, was that Facebook wants to project a message that it's pushing forward in how it uses AI, and that it hopes to solve many of its moderation problems with automated tools in the near future. From the outside, it looks like a giant, AI-enabled game of whack-a-mole, but the idea is that, with time, the computer can whack enough moles on its own that bad actors give up. Whether that actually happens remains to be seen.

After moderation, the company homed in on ethical design and on using many of these AI improvements in different ways. Lade Obamehinti, an engineer on the Portal team, shared a powerful anecdote about how Portal's smart camera didn't work correctly for her: it zoomed in only on her white colleagues, ignoring her because of her skin tone. She explained how this inspired her to build a set of tools for 'inclusive AI', and how the company began to better understand gender, skin tone and other attributes, helping it avoid shipping products that diverse audiences can't use. It's an impressive testament to what AI can do when it's wielded correctly, with everyone in mind.

The day two keynote is genuinely worth watching because it's packed with insights into how Facebook uses AI and which problems it's focusing on. Perhaps for the first time, the company went beyond its earlier "we'll solve it with AI" messaging and actually detailed what it's up against, along with the successes and failures it has had in tackling each problem.

Unlike yesterday, when Zuckerberg flunked the simple job of convincing the world that Facebook cares about privacy in a meaningful way, the message on moderation was clear: we're trying to solve this and we aren't doing nothing; it's just that our scale is unprecedented and we need time to get it right.

The question is whether Facebook will be allowed to decide that for itself in the end, or whether regulators will decide instead.

Tab Dump

Qualcomm nets record $4B revenue from Apple's settlement

That's more than just a bit of pocket change, and it will arrive as a cash payment next quarter. It's a huge deal for the company, which is poised to become the dominant (and essentially only) supplier of 5G modems.

Google now allows you to automatically delete location, app and search activity data

A huge step forward for your own privacy, and Google beats Facebook to a tool that Facebook promised to build for its own service over a year ago. The new settings let you automatically scrub your account so data isn't just sitting around forever, and they might help set a new standard for the industry: data doesn't need to last into eternity, and keeping it on a server is more of a liability than an asset.

Putin just signed a law to isolate the Russian internet

The law seeks to give Russia a button that can disconnect the country from the wider internet, with traffic filtered through the Kremlin's internet censors at all times. When it takes effect on November 1, Russia will see a China-style internet take shape.

Facebook's settlement with the FTC may include a requirement for independent privacy audits and appointing Zuckerberg as 'compliance officer'

It's a quirky but interesting proposal: making Zuckerberg responsible for pushing the privacy agenda forward internally would also make him liable for any future violations.